This June, the Environmental Protection Agency (EPA) issued an Advance Notice of Proposed Rulemaking to announce its request for public input on whether and how to change the way it considers costs and benefits in making regulatory decisions. Of particular interest to EPA and the public is the figure known as the social cost of carbon (SCC).

The SCC is the estimated marginal external cost of a unit emission of carbon dioxide, based upon the future damages (such as reduced agricultural productivity, increased flood damage, or worsened health and mortality) that the unit will inflict through its contribution to the greenhouse effect and the global warming that results. The metric, trenchantly described by Obama economic advisor Michael Greenstone as “the most important number you’ve never heard of,” is the linchpin of myriad climate-related regulations and carbon tax proposals. Given the Supreme Court’s 2007 directive to EPA to evaluate carbon dioxide and EPA’s subsequent finding that it qualifies as a pollutant, calculations of the SCC will have far-reaching consequences. As a point of entry to the stark disagreements on the topic, compare the Trump administration’s current estimate of the SCC of $5 per ton to its predecessor’s estimate of over $40. With the United States emitting around 6 billion metric tons of carbon dioxide equivalent annually, incorporating one estimate instead of the other into the calculation of a regulatory proposal’s costs and benefits all but determines the likelihood of the proposal’s adoption.

Given this significance, it is unfortunate that Greenstone’s observation about the SCC’s obscurity rings true. To make matters worse, even when the concept is known, it is all too frequently misrepresented. The central misunderstanding of the social cost of carbon is that it is a figure existing in nature independent of human judgment, akin to the atomic mass of nickel or the effective gravity at Earth’s equator. On the contrary, the social cost of carbon is an estimate that rests upon normative judgments and assumptions supplied by the modeler. The public, however, is often led to think otherwise.

Consider, for example, Huffington Post’s Alexander C. Kaufman, who describes the social cost of carbon as “a calculation of the damages to property, human health, economic growth and agriculture as a result of climate change.” The SCC utilizes damage projections, yes, but damages and an SCC are not synonymous. Rather, the SCC is an estimate of future damages on a selected timescale translated at a selected rate into present dollar terms. And while Kaufman acknowledges that there is a wide range of estimates for the metric, he still misleads readers by implying that we will soon have the advantage of a scientifically valid figure. “Under Obama, the EPA estimated the social cost of carbon to be between $11 and $105 per ton of carbon dioxide pollution. But the real cost,” Kaufman writes, “could be much higher, according to a study Purdue University published last year, which found that existing models relied on decades-old agricultural data.” Kaufman’s treatment is typical of mainstream coverage of this issue and does our public discourse a disservice.

With this article I do not intend to endorse any particular view of the SCC or to describe it in full, but rather to bring to the reader’s attention a trio of factors (and the complex normative considerations therein) that, along with others, determine an SCC estimate.

Integrated Assessment Models

Integrated Assessment Models (IAMs) are the tools with which we analyze the potential effects—positive and negative—of increased carbon dioxide emissions. IAMs link climate projections with projections of economic activity to predict and monetize welfare impacts. The two most prominent IAMs are FUND, developed by Richard Tol, and DICE, developed by William Nordhaus.

Using the IAMs, modelers can provide an estimate of the marginal cost to the economic system as a whole of emitting a ton of carbon dioxide. But, crucially, even if we assent to the climate projections utilized and trust the economic variables selected by the developers to adequately capture economic costs and benefits in the future, in order to understand the resulting SCC we need to grasp two additional key inputs: the discount rate and the time horizon.

Discount Rate

The discount rate is a concept that seeks to translate future costs and benefits into present dollar terms. As described by Nordhaus, “discounting is a factor in climate-change policy—indeed in all investment decisions—that involves the relative weight of future and present payoffs.” The discount rate is in essence the relative importance one places on costs and benefits that will arise in the future as compared with costs and benefits today. Regarding global warming, the most adverse effects will occur far in the future, but the cost of regulating emissions occurs in the present. The discount rate addresses the question, how much benefit (or averted damage) do we require in the future to prompt us to take on a cost now?
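The mechanics of discounting described above can be sketched in a few lines. This is a minimal illustration, not the method of any particular IAM: it assumes standard annual compounding, and the function name, the $100 damage figure, and the 100-year delay are hypothetical choices made only for the example.

```python
def present_value(future_amount: float, rate: float, years: int) -> float:
    """Translate a single future amount into present dollars.

    Assumes annual compounding at a constant discount rate.
    """
    return future_amount / (1.0 + rate) ** years


# Hypothetical case: $100 of damage occurring 100 years from now,
# valued today at the discount rates discussed in this article.
RATES = (0.025, 0.03, 0.05, 0.07)

for rate in RATES:
    pv = present_value(100.0, rate, 100)
    print(f"{rate:.1%} discount rate -> ${pv:.2f} today")
```

Under these assumptions the same $100 of future damage is worth several dollars today at 2.5 percent but only a small fraction of a dollar at 7 percent, which is why the choice of rate matters so much.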

The first element of a discount rate with which to reckon is what is known as the pure rate of time preference. The pure rate of time preference is the relative weight one places on present consumption versus future consumption as such. Some climate analyses, such as the “Stern Review on the Economics of Climate Change,” performed for the British government last decade, operate on a premise of intergenerational neutrality, essentially holding that we ought to value ourselves today no more than ourselves in the future, nor more than the generations of humans that will follow us. This time-preference orientation can result in a near-zero discount rate. And though it may sound sensible at first blush, when we consider this approach from the perspective of investment the logic crumbles. In contemplating the appropriate relationship between the future and the present we ought to take into account the broader considerations that govern intertemporal decision-making, beginning with the rate of growth and the opportunity cost of capital. As Nordhaus explains:

“(The discount rate) is a positive concept that measures the relative price of goods at different points of time. This is also called the real return on capital, the real interest rate, the opportunity cost of capital, and the real return. The real return measures the yield on investments corrected by the change in the overall price level. In principle, this is observable in the marketplace.”

This approach to the discount rate is sometimes referred to as the financial-equivalent discount rate and tracks with the rate of return one can expect from the market, which averages about 7 percent annually. The upside this presents, to draw the reader’s attention back to the climate issues at hand, is that it prioritizes wealth accumulation—our best buffer against climate risk. The downside is that it may heighten that very risk for our future selves and our offspring.

It is thus between these two extremes that the discount debate transpires: from intergenerational neutrality on the one hand to the opportunity cost of capital on the other. The Obama Interagency Working Group on the Social Cost of Carbon chose to hover in the middle, presenting an SCC at 2.5-, 3- and 5-percent discount rates, but ignoring longstanding Office of Management and Budget guidance to present the SCC at a 7-percent discount rate.

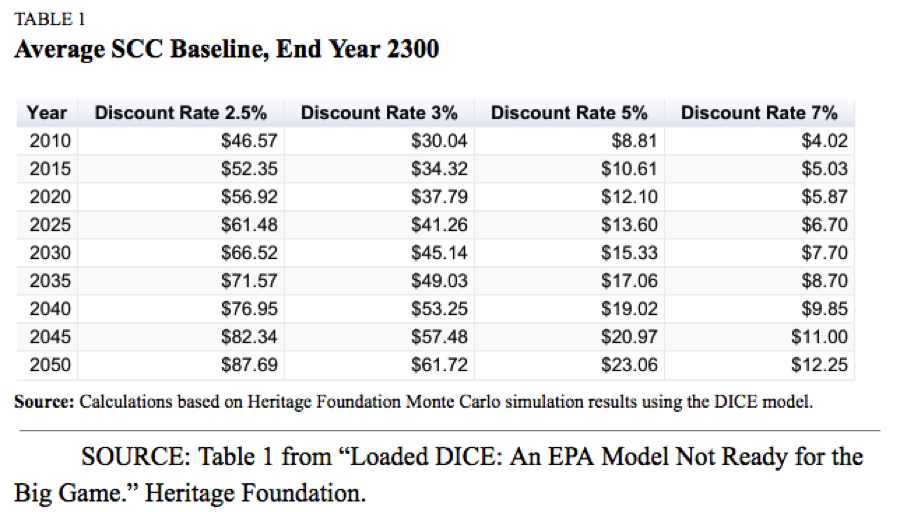

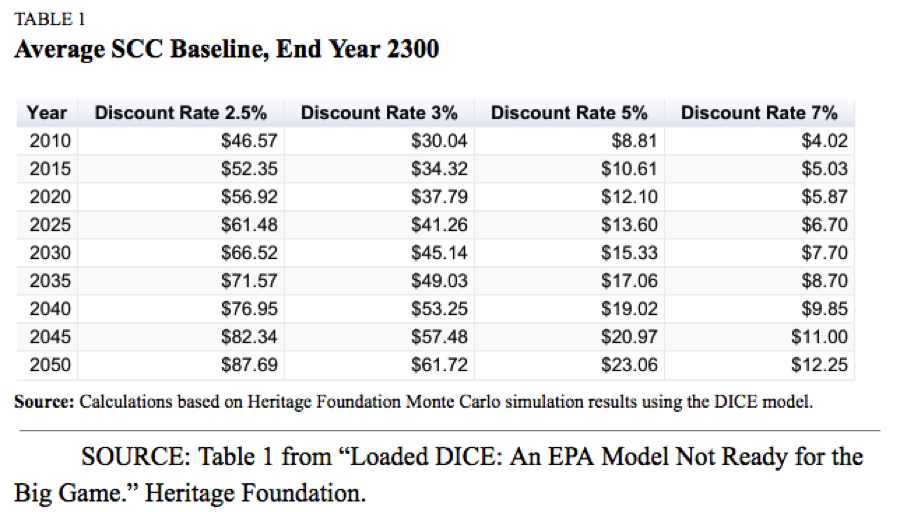

To observe the magnitude of divergence created by simply changing the discount rate, examine the accompanying table. According to calculations based on simulation results using Nordhaus’s DICE model (one of the three IAMs used by the Obama administration), the SCC for the year 2020 varies by nearly a factor of ten: $56.92 at the 2.5-percent discount rate versus $5.87 at the 7-percent rate.

As if this pursuit were not yet fraught enough with uncertainty, theorists also incorporate factors such as the rate of risk aversion and the income elasticity of the value of climate change impacts. Others seek to augment these factors with distributional considerations.

In a recent New York Times article, Brad Plumer offered one of the more thorough treatments of the SCC we have seen from the mainstream media, including even a discussion of the discount rate. “The federal government,” Plumer writes, “has long recommended discount rates of 3 percent and 7 percent for valuing costs and benefits across a single generation. But some economists have argued that higher rates are inappropriate for thinking about long-range problems like global warming, where today’s emissions can have impacts, like melting ice sheets, that reverberate for centuries.”

Plumer’s choice to introduce his readers to the discount rate is praiseworthy, but his commentary, like that of Huffington Post’s Kaufman, still lacks appreciation for the subjectivity of pure rate of time preference and, ergo, the discount rate.

For a final word on the discount rate, I will turn to Lawrence Goulder and Roberton Williams’ consideration of the dilemma in their 2012 discussion paper, “The Choice of Discount Rate for Climate Change Policy Evaluation.” In response to the aforementioned Stern Review, Goulder and Williams argue that “the disagreements about the discount rate are not merely arguments about empirical matters; there are major debates about conceptual issues as well.”

Time Horizon

The second concept, closely related to that of the discount rate, with which we must grapple is the time horizon. This concept is simpler than that of the discount rate in many respects, but its impact is just as profound. IAMs generate multi-century simulations with some stretching to the next millennium.
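The interaction between time horizon and discount rate can be made concrete with a small sketch. It is illustrative only and does not reproduce any IAM: it assumes a constant $1 of damage in every year, annual compounding, a 300-year horizon, and a 0.1-percent rate as a stand-in for a near-zero discount rate. All function names and parameters are hypothetical.

```python
def discounted_stream(annual_damage: float, rate: float, horizon_years: int) -> float:
    """Present value of a constant annual damage over years 1..horizon_years."""
    return sum(
        annual_damage / (1.0 + rate) ** year
        for year in range(1, horizon_years + 1)
    )


def share_beyond(rate: float, cutoff_years: int, horizon_years: int) -> float:
    """Fraction of total present value that arises after the cutoff year."""
    total = discounted_stream(1.0, rate, horizon_years)
    near_term = discounted_stream(1.0, rate, cutoff_years)
    return (total - near_term) / total


# Compare how much of a 300-year total comes from years 101-300.
for rate in (0.001, 0.03, 0.07):
    share = share_beyond(rate, cutoff_years=100, horizon_years=300)
    print(f"{rate:.1%} discount rate -> {share:.1%} of present value lies beyond year 100")
```

With a near-zero rate, most of the present value in this toy example comes from the distant future; at 7 percent, almost none of it does.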

When taking note of the time horizon, I find that the discounting issue, particularly the danger of a low discount rate, is brought into sharper relief. People tend to value their children’s and grandchildren’s lives to an equal or greater extent than their own, but upon some branch on one’s tree of descendants the capacity for rational concern necessarily wanes. Again, Nordhaus:

“This approach is more difficult to interpret when it involves different generations living many years from now, and it arises with particular force when the current generation’s great(n)-grandchildren consume goods and services that are largely unimagined today. These will almost certainly involve unrecognizably different health-care technologies, with supercomputers cheap enough and small enough to fit under the skin, and future generations that grow up and adapt to a world that is vastly different from that of today.”

It thus becomes quite plain when we contemplate the long reaches of time that intergenerational neutrality is a non-starter. Human beings alive today deserve more consideration in forming public policy than hypothetical future members of our species. Otherwise, the benefits and damages we expect to see from climate change in the near term get utterly overwhelmed by damages centuries into the future. As Nordhaus describes in his assessment of the Stern Review:

“The effect of low discounting can be illustrated with a ‘wrinkle experiment.’ Suppose that scientists discover a wrinkle in the climate system that will cause damages equal to 0.1 percent of net consumption starting in 2200 and continuing at that rate forever after. How large a one-time investment would be justified today to remove the wrinkle that starts only after two centuries? Using the methodology of the Review, the answer is that we should pay up to 56 percent of one year’s world consumption today to remove the wrinkle. In other words, it is worth a one-time consumption hit of approximately $30,000 billion today to fix a tiny problem that begins in 2200. It is illuminating to put this point in terms of average consumption levels. Using the Review’s growth projections, the Review would justify reducing per capita consumption for one year today from $10,000 to $4,400 in order to prevent a reduction of consumption from $130,000 to $129,870 starting two centuries hence and continuing at that rate forever after.”
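The per capita figures in Nordhaus’s example can be checked with simple arithmetic. The sketch below uses only numbers quoted in the passage; the implied world-consumption total is a back-calculation for illustration, not a figure stated in the source, and the variable names are hypothetical.

```python
# Figures quoted in the wrinkle experiment.
consumption_today = 10_000        # per capita consumption today, dollars
one_time_share = 0.56             # one-time payment as a share of one year's consumption
future_consumption = 130_000      # per capita consumption two centuries hence, dollars
wrinkle_share = 0.001             # damages equal to 0.1 percent of consumption
one_time_cost_billion = 30_000    # approximate one-time cost, billions of dollars

# Per capita consumption left after the one-time payment.
after_payment = round(consumption_today * (1 - one_time_share))

# Per capita consumption after the wrinkle's damages, if nothing is done.
after_wrinkle = round(future_consumption * (1 - wrinkle_share))

# Back-calculated (not from the source): annual world consumption implied
# by a $30,000 billion cost equal to 56 percent of one year's consumption.
implied_world_consumption_billion = one_time_cost_billion / one_time_share

print(f"Consumption today after payment: ${after_payment:,}")
print(f"Future consumption with the wrinkle: ${after_wrinkle:,}")
print(f"Implied world consumption: about ${implied_world_consumption_billion:,.0f} billion")
```

The first two results reproduce the $4,400 and $129,870 figures in the quotation: a $5,600 sacrifice per person today to avert a $130 loss per person per year beginning two centuries from now.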

The time horizon of IAM simulations, when paired with a suspiciously low discount rate, pushes the concept of the social cost of carbon to the very precipice of arbitrariness and capriciousness.

Global vs. Domestic Costs and Benefits

The third and final contributor to SCC figures that I will address is the exclusion or inclusion of foreign benefits and damages. An element of cost-benefit analysis that has been regrettably opaque is the question of costs and benefits to whom. The costs that a warming planet would entail would not be distributed evenly within countries, let alone between them. Indeed, some regions of the globe would stand to gain from the greening effect of increased carbon dioxide concentrations and the longer growing season promoted by warmer temperatures. A fundamental rift between the respective approaches of the Obama and Trump administrations is that the former opted for a global estimate, while the latter prefers to focus on the domestic costs and benefits. The Obama estimate, largely as a result of this difference in approach, is much higher.

As Plumer described in his New York Times article:

“First, the E.P.A. took the Obama-era models and focused solely on damages that occurred within the borders of the United States, rather than looking at harm to other countries as well. That change alone reduced the social cost of carbon estimate to around $7 per ton.

The reasoning was simple: If Americans are paying the cost of these rules to mitigate climate change, then only benefits that accrue to Americans themselves should be counted.”

But this issue is not as simple as a wealthy global north enriching itself at the expense of a poor global south. Some of the developing countries that face the gravest risks from future climate damages also reap rewards in the near term from direct emissions benefits. As described by David Anthoff, Richard Tol, and Gary Yohe in “Discounting for Climate Change” (2009):

“One of the basic results of the climate change impact literature is that poor countries tend to be more vulnerable to climate change, and the results in Figure 3 certainly reflect this. With zero income elasticities, the SCC tends to be higher – at least, if the discount rate is low. If the discount rate is high, the SCC is negative (that is, climate change is a net benefit); and with zero income elasticities, the SCC is even more negative (that is, climate change is an even greater benefit). The reason can be found in the positive impacts of carbon dioxide fertilization on agriculture in poor countries.”

So while it is the case that the poorer countries of, say, south and southeast Asia will bear the consequences of a warmer, wetter world in the future, they also stand to make some gains in the near term from the direct positive impact of carbon dioxide fertilization on agricultural yields. At a high discount rate, models can even generate a negative social cost of carbon, implying that carbon emissions should be subsidized rather than taxed. For a more comprehensive discussion of the federal government’s approach to global and domestic costs and benefits with respect to climate change, see the work of Ted Gayer and W. Kip Viscusi.

Ultimately, as with the discount rate, one’s answer to the global vs. domestic question relies on one’s normative premises. If, as I do, one opts to include global costs and benefits in an evaluation of the issue as a matter of justice, it is nevertheless appropriate to make that choice transparent to the American public, who would bear the immediate costs of any federal regulatory action that follows.

Conclusion

The purpose of this article is not to endorse any specific calculation of a social cost of carbon, nor to offer an exhaustive evaluation of methodology. Rather, it is to highlight the degree of individual discretion involved in crafting an estimate. The social cost of carbon is not a metaphysically given figure; it is a figure contingent upon the particular normative perspective one chooses to favor. Depending upon the discount rate (itself contingent upon estimates of the rate of time preference, growth rates, and income elasticity), the time horizon, and the choice to exclude or include global effects, one can generate virtually any SCC one wants.

In performing cost-benefit analyses, our government has a responsibility to present the fullest possible view to the public. In the context of climate change, that means exploring the social cost of carbon at a wide range of discount rates, on a diversity of time horizons, and showing both the domestic and the global consequences.

The post The Social Cost of Carbon: Considerations and Disagreements in Climate Economics appeared first on IER.

